Long and Short Memory Balancing in Visual Co-Tracking using Q-Learning

ICIP'2019, Taipei, Taiwan, Sep 22-Sep 25, 2019

Employing one or more additional classifiers to break the self-learning loop in tracking-by-detection has gained considerable attention. Most such trackers merely use this redundancy to address the label error that accumulates in the tracking loop, and they suffer from high computational complexity as well as from tracking challenges that may disrupt all classifiers at once (e.g., temporary occlusions). We propose an active co-tracking framework, in which the main classifier of the tracker labels the samples of the video sequence and consults the auxiliary classifier only when it is uncertain. Depending on the source of the uncertainty and on the differences between the two classifiers (e.g., accuracy, speed, update frequency), different policies should be adopted to exchange information between them. Here, we introduce a reinforcement learning approach that finds the appropriate policy by considering the state of the tracker in a specific sequence. The proposed method yields promising results in comparison to the best tracking-by-detection approaches.

Overview

A classifier is not always certain about its output labels. Ineffective features for certain input images, insufficient model complexity to separate some of the samples, a lack of proper training data, missing information in the input data (e.g., due to occlusion), or, technically speaking, an input sample that falls very close to the classifier's decision boundary can hamper the classifier's ability to be sure about its label and increase the risk of misclassification. In online learning especially, novel appearances of the target, background distractors, and the non-stationarity of the label distribution¹ all increase the classifier's uncertainty.
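The decision-boundary view mentioned above can be made concrete. The sketch below is a minimal illustration, assuming a binary classifier whose signed score is mapped to a posterior probability p of the sample being the target. The measure 1 - |2p - 1| (equal to 1 on the boundary p = 0.5 and 0 when p is 0 or 1) and the function names are hypothetical choices, not necessarily the uncertainty measure used in the paper.

```python
import math
from typing import List, Sequence


def sigmoid(score: float) -> float:
    """Numerically stable map from a signed decision value to a probability."""
    if score >= 0:
        return 1.0 / (1.0 + math.exp(-score))
    z = math.exp(score)  # safe: score < 0, so exp cannot overflow
    return z / (1.0 + z)


def uncertainty(p: float) -> float:
    """Hypothetical uncertainty of a binary posterior p: 1 at p = 0.5, 0 at p = 0 or 1."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    return 1.0 - abs(2.0 * p - 1.0)


def batch_uncertainty(scores: Sequence[float]) -> List[float]:
    """Uncertainty of each sample, given the classifier's signed scores."""
    return [uncertainty(sigmoid(s)) for s in scores]
```

Samples whose uncertainty is high (scores near zero) are exactly the ones for which asking a second opinion is most useful; confidently scored samples can be labeled directly.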

Here, we use information about the classifier's uncertainty state in a given scenario to control the exchange of information between the main classifier of the tracker and a more accurate yet slower auxiliary classifier in the co-tracking framework. To cope with rapid target changes and to handle challenges such as temporary target deformations and occlusion, we assign the two classifiers different memory spans and update frequencies. Naturally, the main classifier is chosen to be an agile, plastic, easily and frequently updated model, whereas the auxiliary classifier is more sophisticated (accurate yet slow), more stable (memorizing all labels in the tracker's history), and updated less frequently. In line with uncertainty sampling, the main classifier queries the auxiliary one for a label only when it is uncertain about a sample.

We propose a Q-learning approach to govern the information exchange between the two classifiers with respect to the uncertainty state of the main classifier. This scheme automatically balances the stability-plasticity and long-short memory trade-offs in tracking. It also speeds up the tracker (by avoiding unnecessary queries to the slow classifier) and improves the generalization ability of the main classifier (by benefiting from active learning). The proposed tracker outperforms many state-of-the-art tracking-by-detection methods.
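The query rule and the two memory spans described above can be sketched as follows. This is a minimal illustration under stated assumptions: both classifiers are treated as black-box functions returning P(target | sample), the main classifier keeps only a hypothetical sliding window of `short_span` recent labels, and the auxiliary classifier keeps every label but is retrained only every `aux_period` frames. `co_label`, `CoTrackerMemory`, and their parameters are hypothetical names, not taken from the paper's code.

```python
from collections import deque
from typing import Callable, Deque, List, Sequence, Tuple

Feature = Tuple[float, ...]
Labeled = Tuple[Feature, int]
Scorer = Callable[[Feature], float]  # returns P(target | sample) in [0, 1]


def co_label(samples: Sequence[Feature], main: Scorer, aux: Scorer,
             margin: float) -> Tuple[List[Labeled], int]:
    """Label samples with the main classifier; query the auxiliary one only when
    the main posterior lies within `margin` of the 0.5 decision boundary."""
    labeled: List[Labeled] = []
    queries = 0
    for x in samples:
        p = main(x)
        if abs(p - 0.5) < margin:  # uncertain: ask the slow, accurate classifier
            p = aux(x)
            queries += 1
        labeled.append((x, 1 if p >= 0.5 else 0))
    return labeled, queries


class CoTrackerMemory:
    """Short memory for the main classifier, long memory for the auxiliary one."""

    def __init__(self, short_span: int, aux_period: int) -> None:
        self.short: Deque[Labeled] = deque(maxlen=short_span)  # recent labels only
        self.long: List[Labeled] = []  # every label in the tracker's history
        self.aux_period = aux_period
        self.frame = 0

    def add(self, labeled: Sequence[Labeled]) -> bool:
        """Store one frame of co-labeled samples.

        The main classifier would be retrained on `self.short` every frame; the
        return value tells the caller whether the auxiliary classifier is due
        for retraining on `self.long`."""
        self.short.extend(labeled)
        self.long.extend(labeled)
        self.frame += 1
        return self.frame % self.aux_period == 0
```

The margin is left as a free parameter here; in the proposed tracker, the uncertainty margin is the hyperparameter that the learned query policy selects from the classification scores. A larger margin sends more samples to the slow classifier, trading speed for label quality.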

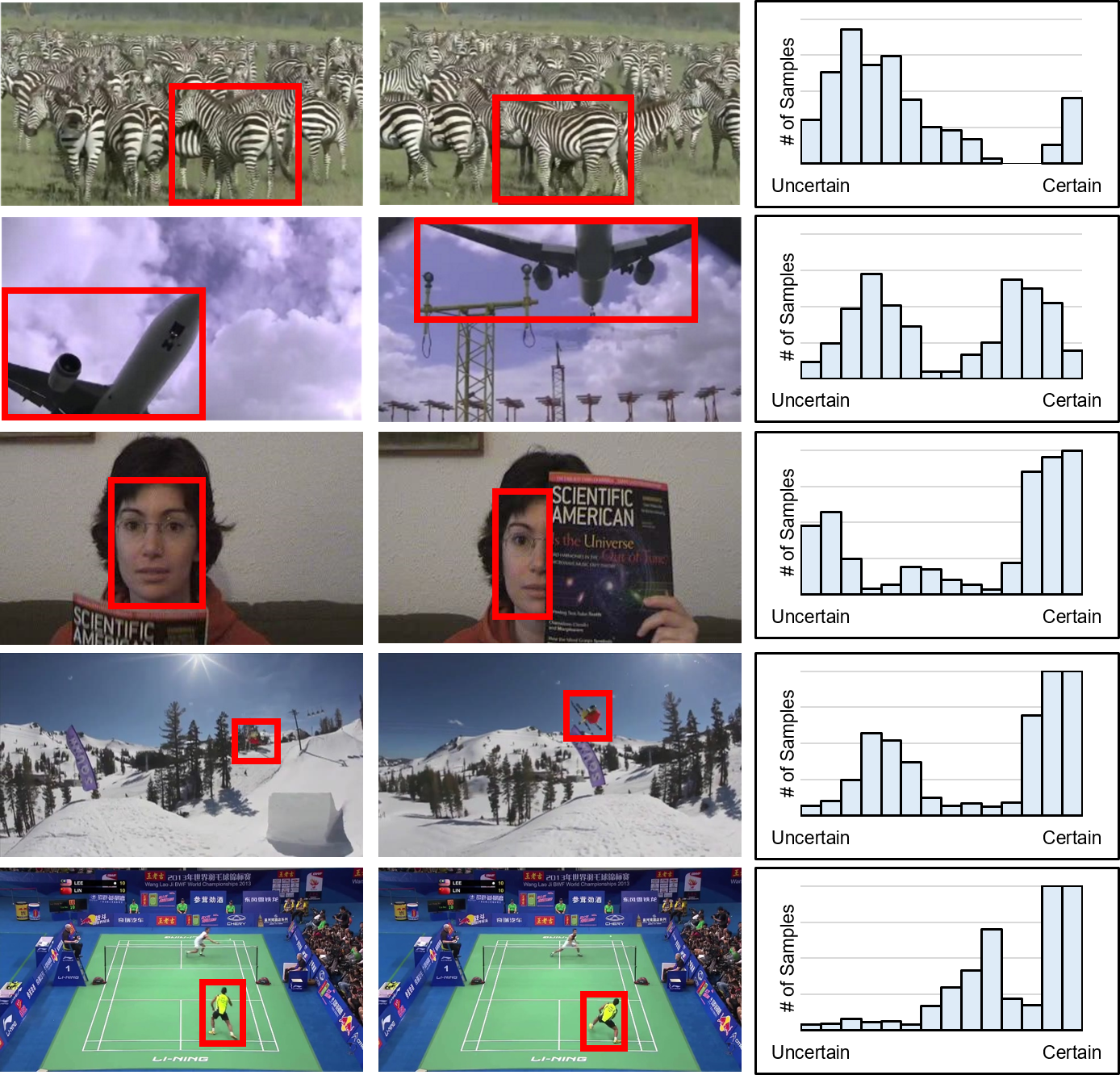

Consider a tracking-by-detection classifier that uses color and shape features and has been trained on the video frames up to the frame shown in the left column. When it classifies samples from the frame in the middle column, the uncertainty over all samples may follow different patterns, as plotted in the uncertainty histograms in the right panels. The histogram may be skewed toward certainty or toward uncertainty (e.g., due to feature failures or occlusion), or it may be bimodal (typically when the background is easy to separate but the foreground is ambiguous). In co-tracking frameworks, these different uncertainty patterns call for different policies to enhance tracking performance.
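To feed such patterns to a tabular policy, the histogram has to be summarized into a discrete state. The sketch below is a minimal illustration, assuming the per-sample uncertainty 1 - |2p - 1| for a posterior p. Summarizing the histogram by the mass in its lowest and highest thirds, the state names, and the thresholds `skew` and `mode` are all hypothetical choices, not the state definition used in the paper.

```python
from typing import List, Sequence


def uncertainty_histogram(probs: Sequence[float], bins: int = 10) -> List[float]:
    """Normalized histogram of per-sample uncertainty u = 1 - |2p - 1| on [0, 1]."""
    counts = [0] * bins
    for p in probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError("posteriors must lie in [0, 1]")
        u = 1.0 - abs(2.0 * p - 1.0)
        counts[min(int(u * bins), bins - 1)] += 1  # u == 1.0 falls in the last bin
    total = len(probs) or 1  # avoid division by zero for an empty frame
    return [c / total for c in counts]


def histogram_state(hist: Sequence[float], skew: float = 0.6,
                    mode: float = 0.25) -> str:
    """Hypothetical discrete summary of the histogram shape, based on the mass
    in the lowest and highest thirds of the uncertainty range."""
    k = max(len(hist) // 3, 1)
    low, high = sum(hist[:k]), sum(hist[-k:])
    if low >= mode and high >= mode:
        return "bimodal"    # e.g., easy background, ambiguous foreground
    if low >= skew:
        return "certain"    # skewed toward certainty
    if high >= skew:
        return "uncertain"  # skewed toward uncertainty, e.g., occlusion
    return "spread"         # no dominant pattern
```

A coarse summary like this keeps the state space small, which matters for a Q-table that must be filled from a limited number of training sequences.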

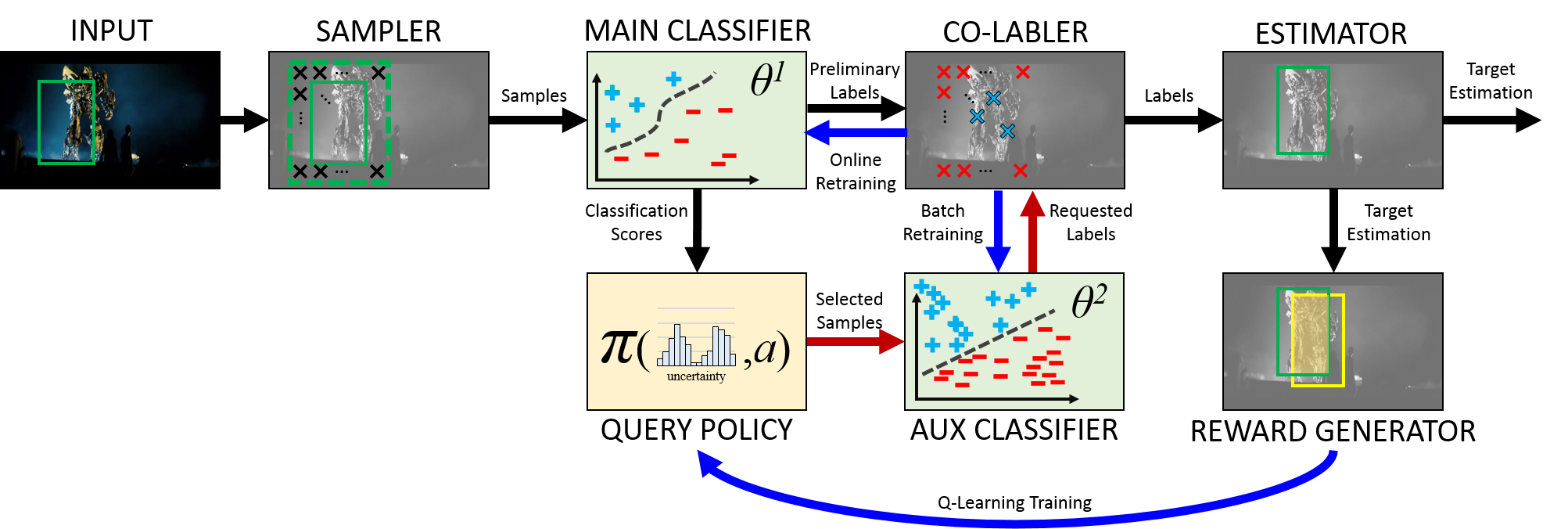

The proposed tracker collects samples from a predefined area around the last known target location. The classification scores are fed to the query policy unit, which selects the best value for the uncertainty margin hyperparameter. If needed, the auxiliary classifier is queried for the labels of the samples. The classifiers are then updated using the co-labeled samples, and the target state is estimated. In the training phase, the estimated target is compared with the ground truth, and their normalized intersection is used as the reward to train the query policy's Q-table.
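The training of the query policy can be sketched with standard tabular Q-learning. This is a minimal sketch under several assumptions: states are any hashable summary of the uncertainty pattern, actions are indices into a hypothetical discrete set of candidate margins, the normalized-intersection reward is taken to be intersection-over-union of axis-aligned boxes given as (x, y, width, height), and the learning rate, discount factor, and epsilon-greedy exploration rate are illustrative values. `MarginPolicy` and `overlap_reward` are hypothetical names.

```python
import random
from collections import defaultdict
from typing import DefaultDict, Hashable, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)


def overlap_reward(est: Box, gt: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes, in [0, 1]."""
    ix = max(0.0, min(est[0] + est[2], gt[0] + gt[2]) - max(est[0], gt[0]))
    iy = max(0.0, min(est[1] + est[3], gt[1] + gt[3]) - max(est[1], gt[1]))
    inter = ix * iy
    union = est[2] * est[3] + gt[2] * gt[3] - inter
    return inter / union if union > 0 else 0.0


class MarginPolicy:
    """Tabular Q-learning: state = uncertainty pattern, action = margin index."""

    def __init__(self, margins: Sequence[float], alpha: float = 0.1,
                 gamma: float = 0.9, epsilon: float = 0.1, seed: int = 0) -> None:
        self.margins = list(margins)
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        # Unseen states start with all-zero action values.
        self.q: DefaultDict[Hashable, List[float]] = defaultdict(
            lambda: [0.0] * len(self.margins))
        self.rng = random.Random(seed)

    def act(self, state: Hashable, explore: bool = True) -> int:
        """Epsilon-greedy margin index; ties go to the smallest index."""
        if explore and self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.margins))
        row = self.q[state]
        return max(range(len(row)), key=row.__getitem__)

    def update(self, state: Hashable, action: int, reward: float,
               next_state: Hashable) -> None:
        """Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))."""
        target = reward + self.gamma * max(self.q[next_state])
        self.q[state][action] += self.alpha * (target - self.q[state][action])
```

During training, `act` picks a margin index for the current frame (read the value as `policy.margins[index]`), and `update` is called once the frame's reward is known from the ground truth. At test time, where no ground truth is available, the greedy choice `act(state, explore=False)` would be used with the learned table.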

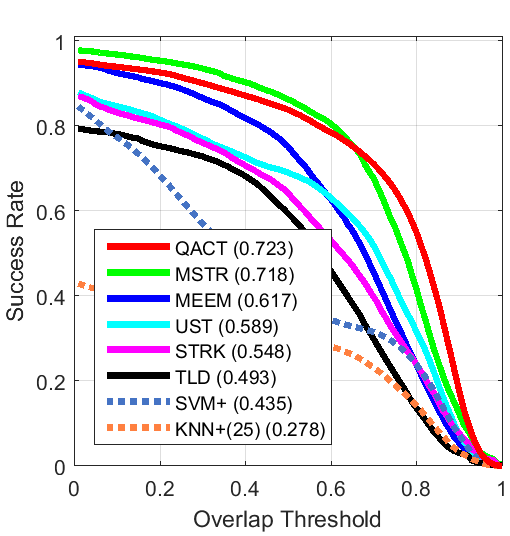

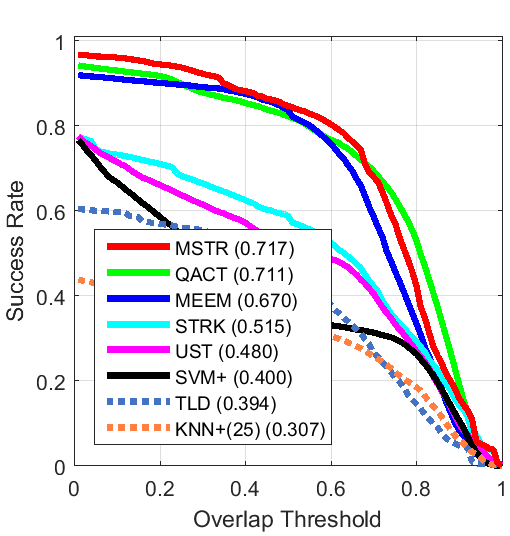

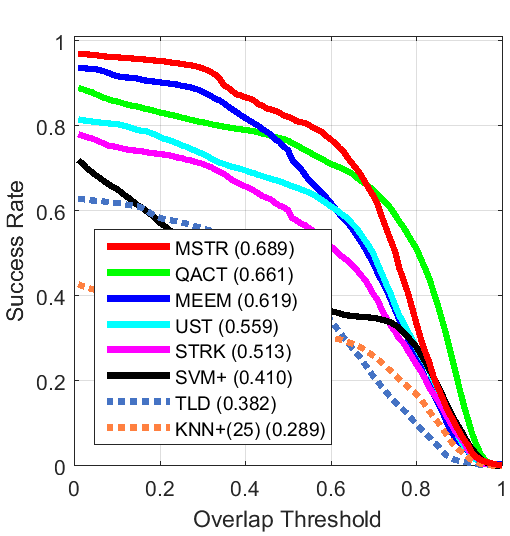

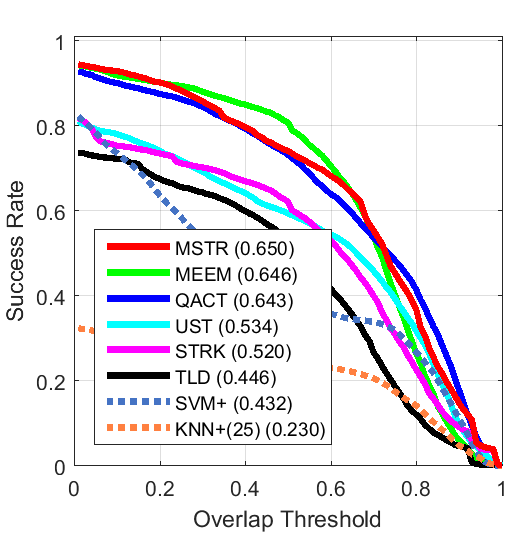

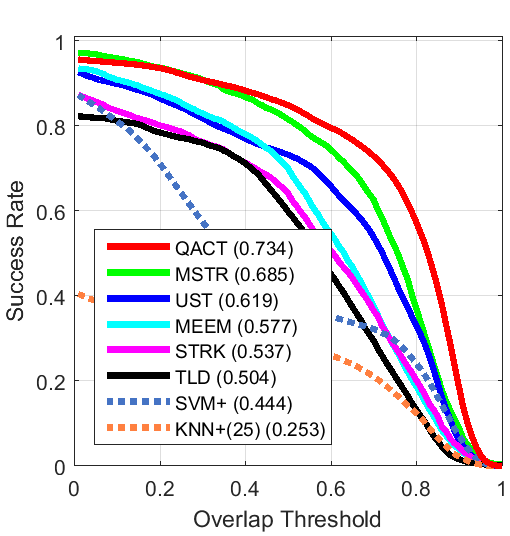

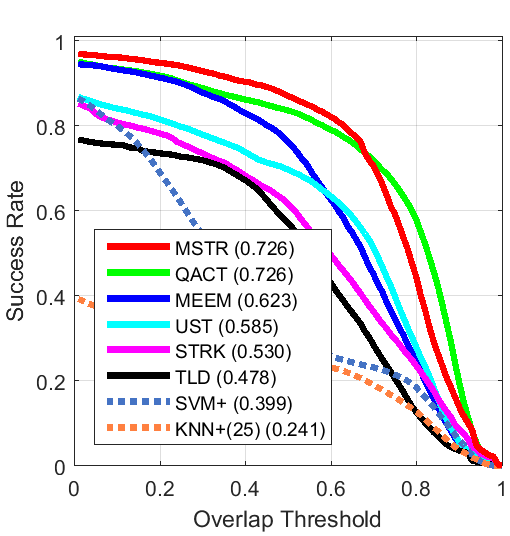

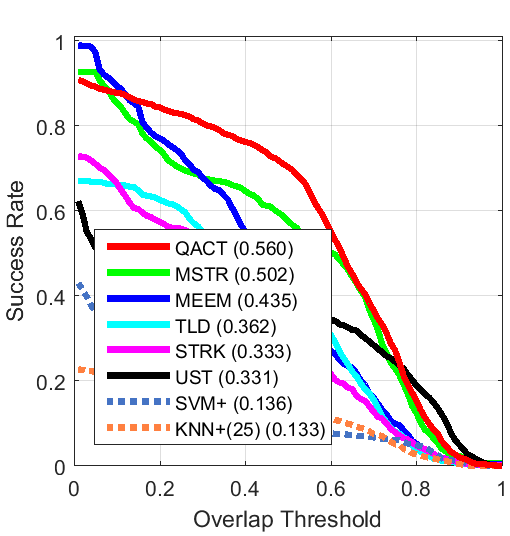

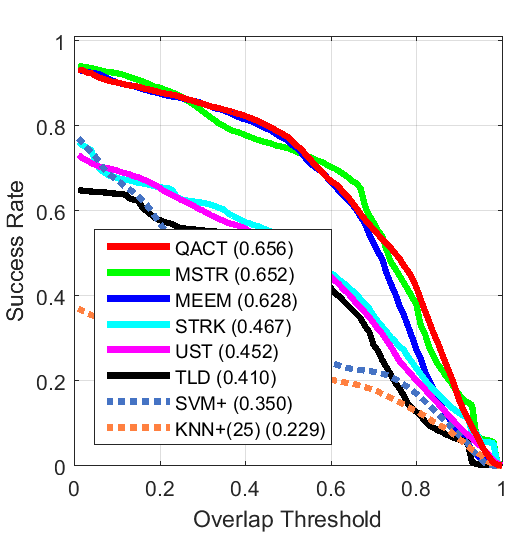

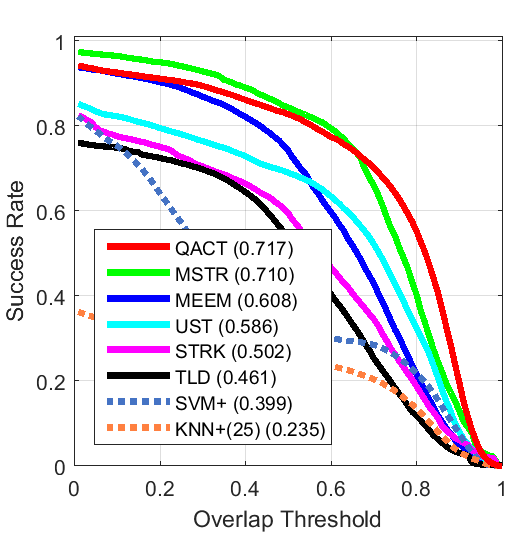

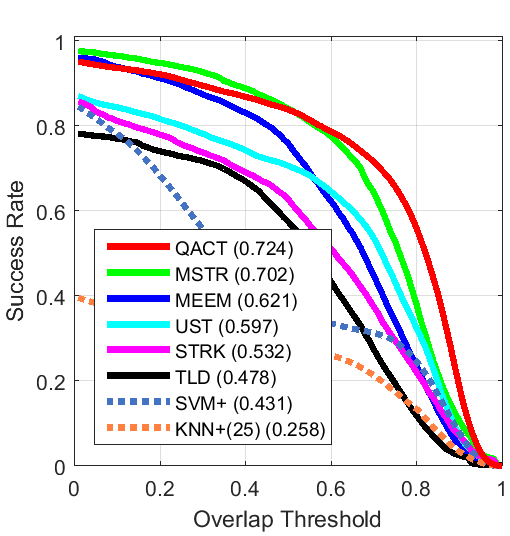

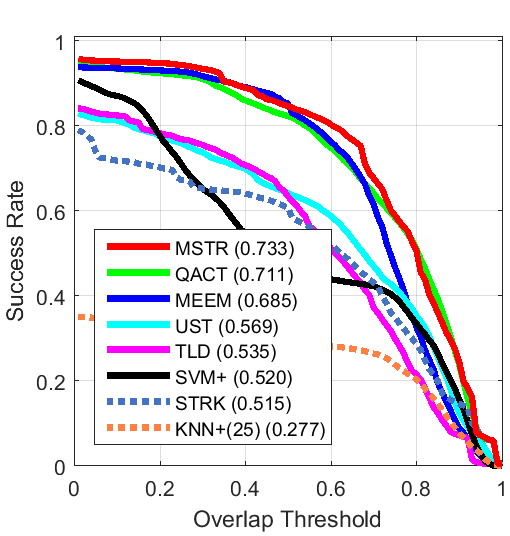

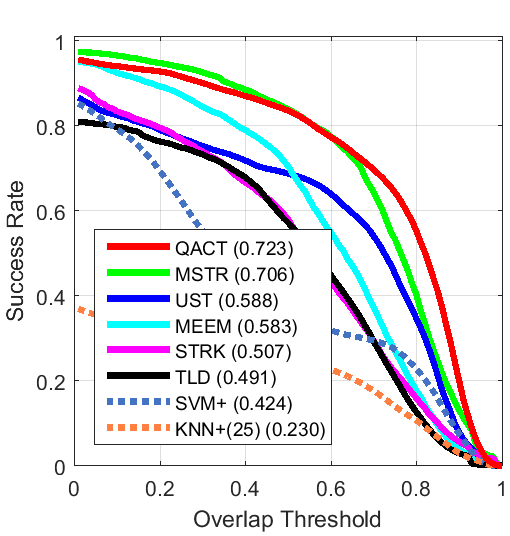

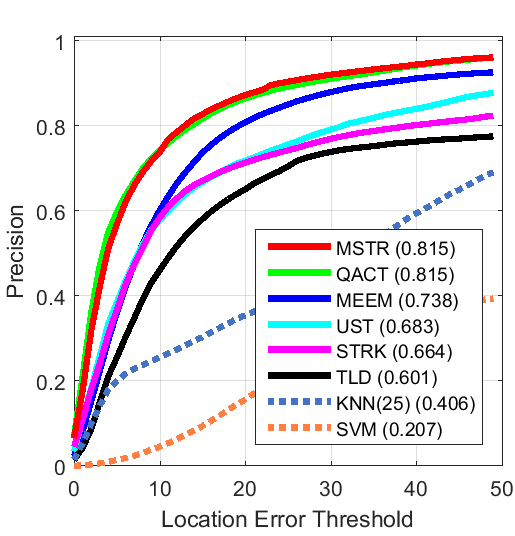

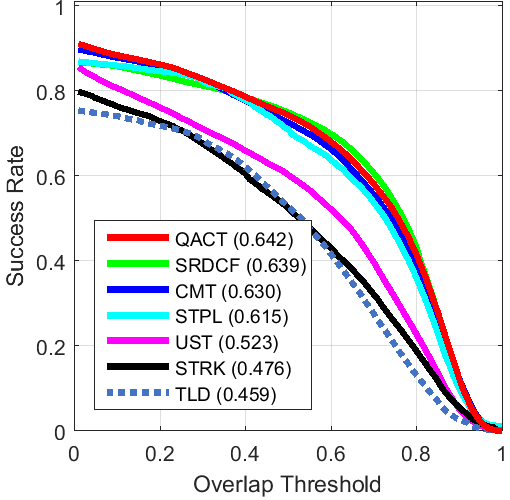

Success Plots

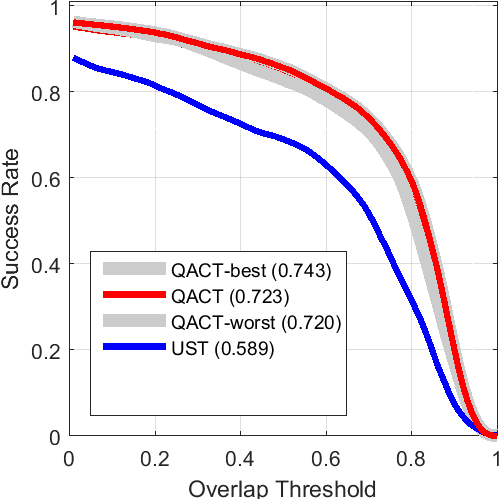

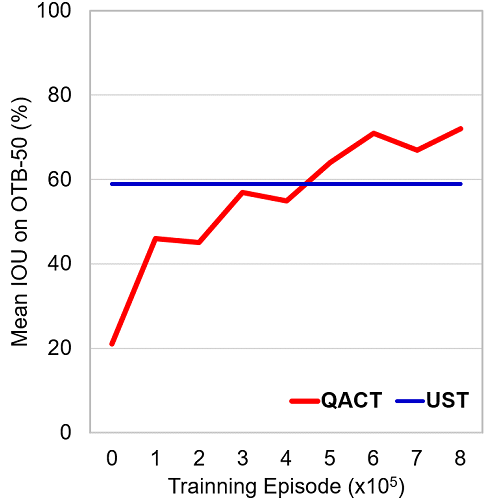

Other Experiments

BibTeX reference


@INPROCEEDINGS{meshgi2018qact,
  author={Meshgi, Kourosh and Mirzaei, Maryam Sadat and Oba, Shigeyuki},
  booktitle={2019 IEEE International Conference on Image Processing (ICIP)},
  title={Long and Short Memory Balancing in Visual Co-Tracking using Q-Learning},
  year={2019}
}

Acknowledgements

This article is based on results obtained from a project commissioned by NEDO, Japan, and was supported by the Post-K application development for exploratory challenges of MEXT, Japan.

For more information or help please email meshgi-k [at] sys.i.kyoto-u.ac.jp.