Active Collaboration of Classifiers for Visual Tracking

In G. Anbarjafari and S. Escalera (Eds.), Human-Robot Interaction - Theory and Application, InTech Publication

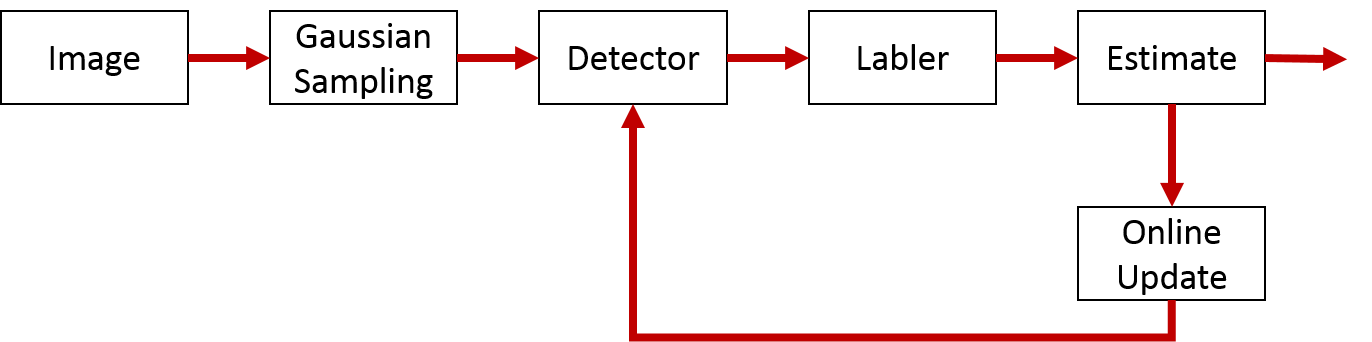

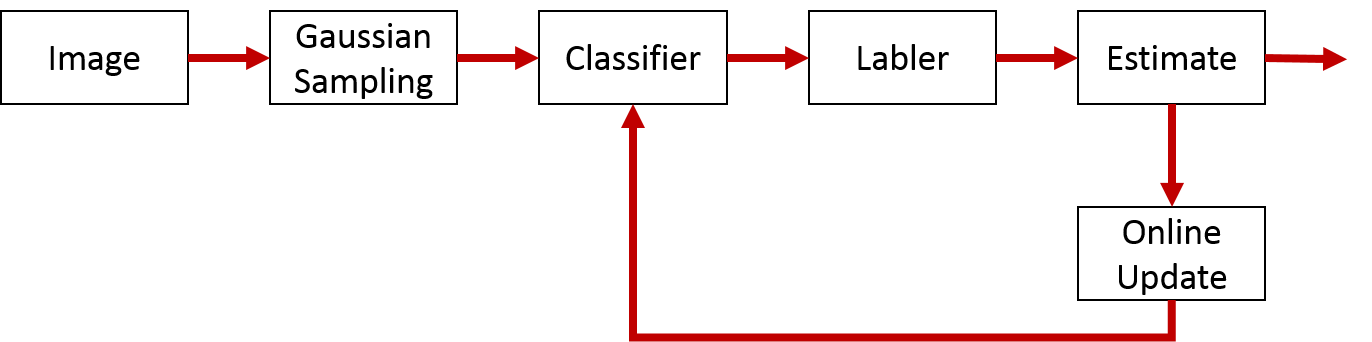

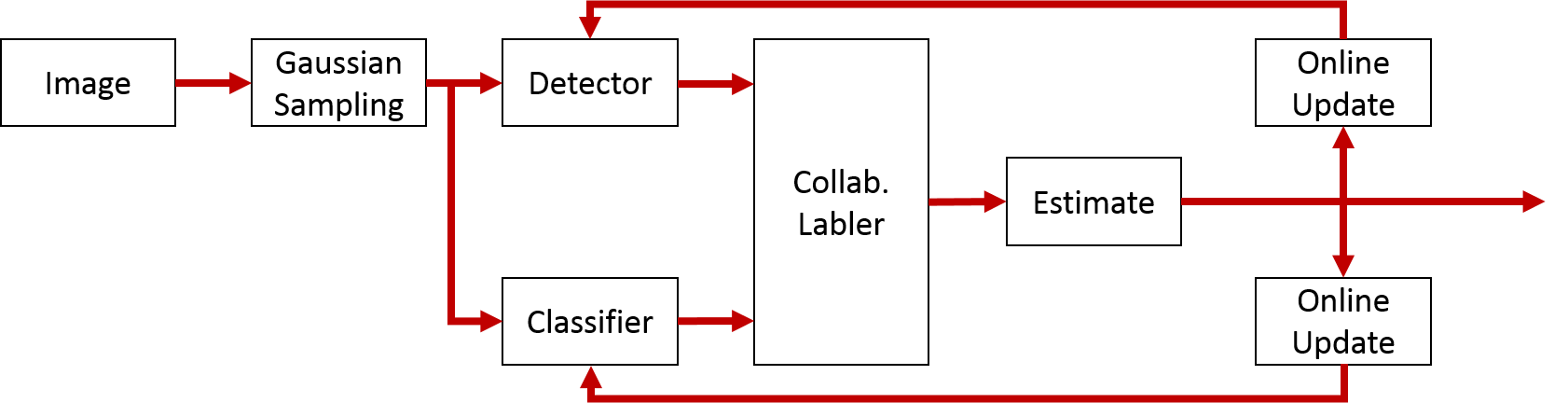

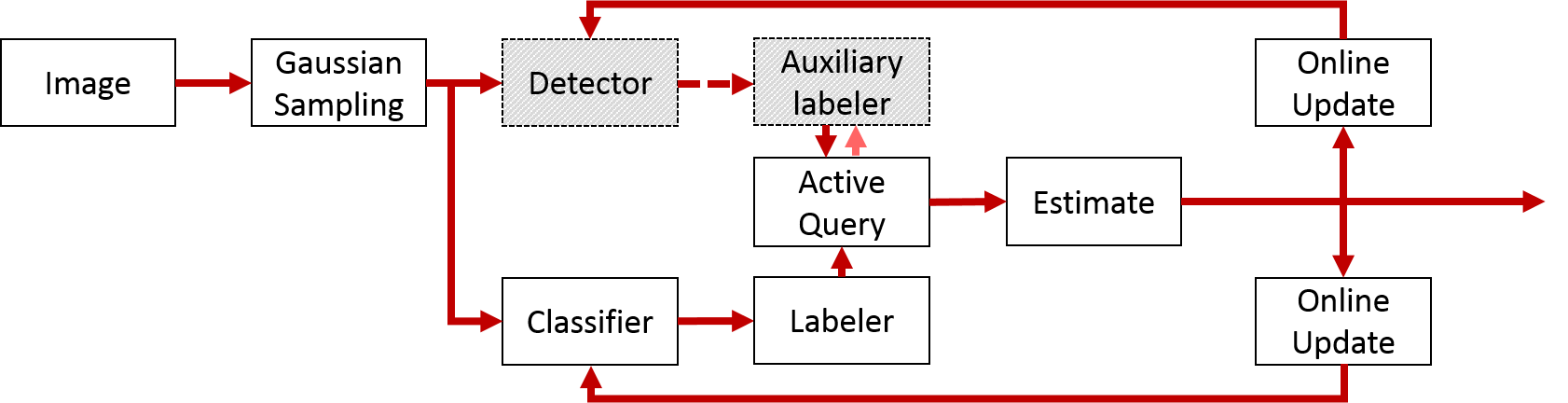

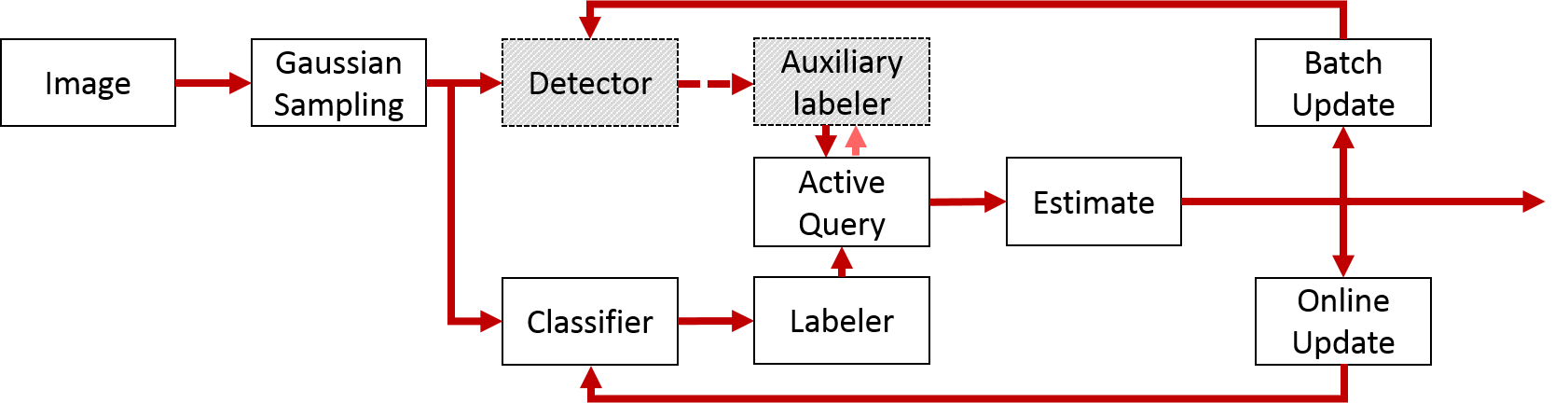

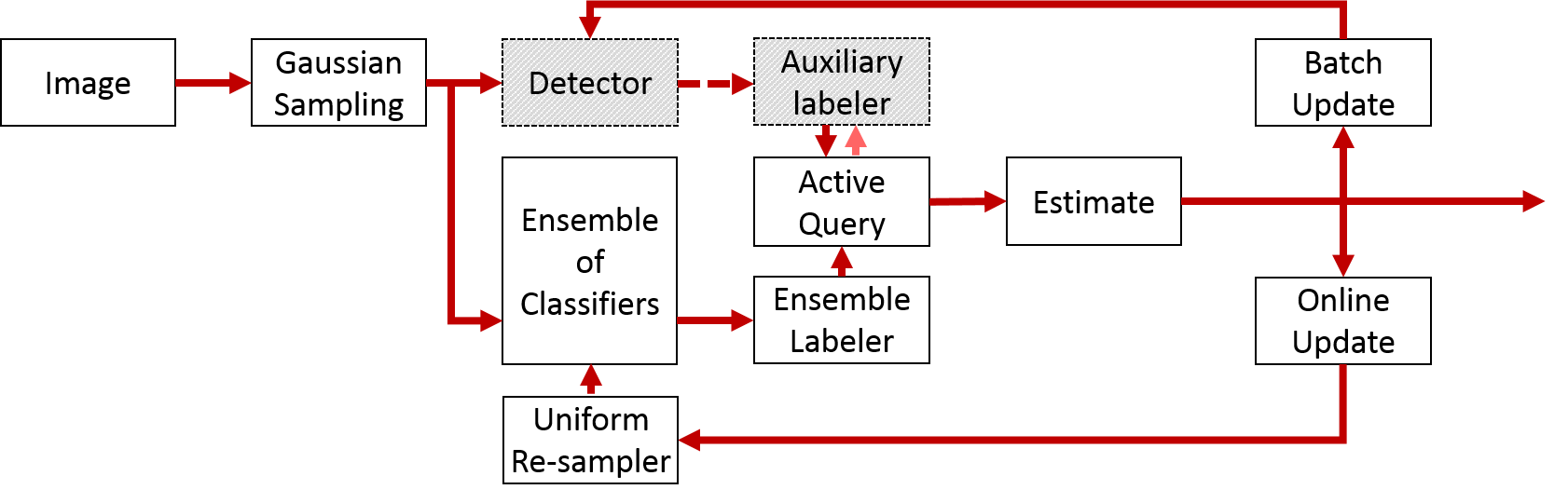

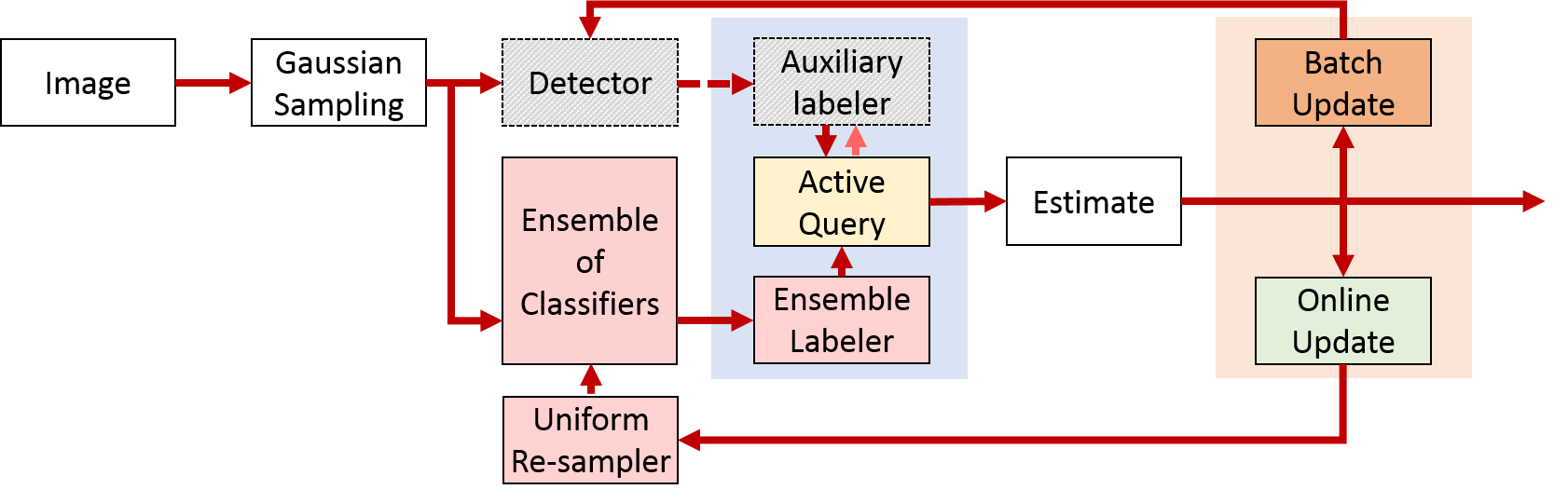

Discriminative visual trackers have recently achieved state-of-the-art performance, yet they still struggle with real-world challenges such as target motion and appearance changes. In a discriminative tracker, one or more classifiers assign a target/non-target label to each sample, and these labels in turn determine the target's location. To cope with variations in the target's shape and appearance, the classifier(s) are updated online with new samples of the target and the background. Sample selection, labeling, and classifier updating are each prone to errors that cause the tracker to drift. In this study we motivate, conceptualize, realize, and formalize a novel active co-tracking framework step by step, demonstrating the challenges and generic solutions to them. In this framework, the classifiers not only cooperate in labeling the samples but also exchange information to make the labeling more robust, improve the sampling, and realize efficient yet effective updating. The proposed framework is evaluated against state-of-the-art trackers on a public dataset and shows promising results.
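To make the labeling-to-location step concrete, here is a minimal sketch of how classifier scores over candidate samples might be turned into a target location. It is an illustration under stated assumptions, not the chapter's implementation: the function name `locate_target`, the `[x, y, w, h]` box layout, and the score-weighted averaging rule are all hypothetical choices.

```python
import numpy as np

def locate_target(boxes, scores):
    """Estimate the target box from scored candidate samples.

    boxes:  (n, 4) candidate boxes as [x, y, w, h] (assumed layout).
    scores: (n,) signed classifier scores; positive means "target".
    Returns the score-weighted mean of the positive boxes, or None
    when no sample is labeled as target.
    """
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float)
    positive = scores > 0
    if not positive.any():
        return None  # caller may keep the previous location
    weights = scores[positive] / scores[positive].sum()
    return weights @ boxes[positive]

candidates = [[0, 0, 10, 10], [2, 2, 10, 10], [50, 50, 10, 10]]
print(locate_target(candidates, [1.0, 3.0, -2.0]))
```

In this toy call the third candidate is labeled non-target and ignored, so the estimate lies between the first two boxes, closer to the one with the higher score.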

Overview

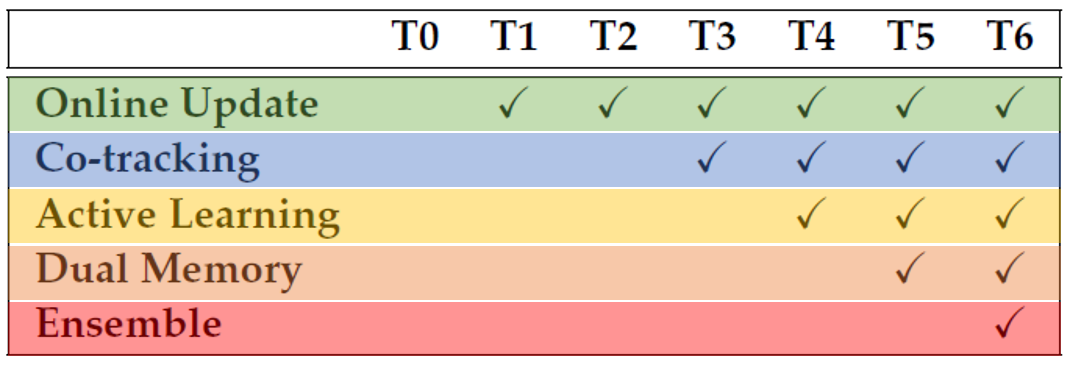

In this study we motivate, conceptualize, realize, and formalize a novel co-tracking framework. First, the importance of such a system is demonstrated through a recent and comprehensive literature review (Bib entries are provided for convenience). Then a discriminative tracking framework is formalized and evolved into a co-tracking framework, with every step explained both mathematically and intuitively. We then construct various instances of the proposed co-tracking framework to demonstrate how different topologies of the system can be realized, how the information exchange is optimized, and how different tracking challenges (e.g., abrupt motions, deformations, clutter) can be handled. Active learning is explored in the context of labeling and information exchange to speed up the tracker's convergence while updating its classifiers effectively. A dual memory is also proposed to handle tracking scenarios ranging from camera motion to temporal appearance changes of the target and occlusions.
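The idea of active information exchange during labeling can be sketched as follows. This is a simplified illustration, assuming two classifiers that output signed confidence scores: the first classifier labels every sample on its own unless its score falls inside an uncertainty margin, in which case it defers to the second. The function name `co_label` and the `margin` parameter are hypothetical and do not come from the chapter.

```python
import numpy as np

def co_label(scores_a, scores_b, margin=0.5):
    """Label samples with classifier A, querying B where A is uncertain.

    scores_a, scores_b: signed scores per sample (positive = target).
    margin: assumed uncertainty threshold on |score| of classifier A.
    Returns (labels in {-1, +1}, boolean mask of samples A asked B about).
    """
    scores_a = np.asarray(scores_a, dtype=float)
    scores_b = np.asarray(scores_b, dtype=float)
    uncertain = np.abs(scores_a) < margin          # A is not confident here
    final = np.where(uncertain, scores_b, scores_a)  # defer only those to B
    labels = np.where(final >= 0, 1, -1)
    return labels, uncertain

labels, queried = co_label([2.0, 0.1, -0.2, -3.0], [1.0, -1.5, 0.8, 1.0])
print(labels, queried)
```

Only the two middle samples are passed to the second classifier, so the exchange stays limited to the samples where it is most informative, which is the usual motivation for uncertainty-based querying.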

Trackers introduced in this chapter:

This chapter provides a step-by-step tutorial for building an accurate, high-performance tracking-by-detection algorithm out of ordinary detectors by eliciting an effective collaboration among them. Using active learning in conjunction with co-learning enables the creation of a battery of trackers that strive to minimize the uncertainty of one classifier with the help of another. Finally, we propose employing a committee of classifiers, each trained incrementally on a randomized portion of the most recently obtained training samples, to enhance the discriminative power of the tracker.
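A committee of this kind can be sketched in a few lines. The example below is only an illustration: it substitutes a plain perceptron for whatever classifiers the chapter actually uses, and the names `Perceptron`, `update_committee`, `committee_vote`, and the `portion` parameter are hypothetical.

```python
import numpy as np

class Perceptron:
    """Tiny incremental linear classifier (stand-in for a real detector)."""
    def __init__(self, dim):
        self.w = np.zeros(dim)

    def partial_fit(self, X, y):
        # One pass of the perceptron rule: update only on mistakes.
        for x, t in zip(X, y):
            if t * (self.w @ x) <= 0:
                self.w += t * x

    def score(self, X):
        return X @ self.w

def update_committee(committee, X, y, rng, portion=0.5):
    """Train each member on its own random portion of the latest samples."""
    n = len(X)
    k = max(1, int(portion * n))
    for member in committee:
        idx = rng.choice(n, size=k, replace=False)
        member.partial_fit(X[idx], y[idx])

def committee_vote(committee, X):
    """Majority vote over the members' signed decisions (ties go to +1)."""
    votes = np.sign(np.stack([m.score(X) for m in committee]))
    return np.where(votes.sum(axis=0) >= 0, 1, -1)

X = np.array([[1.0, 0.0], [2.0, 0.0], [-1.0, 0.0], [-2.0, 0.0]])
y = np.array([1, 1, -1, -1])
committee = [Perceptron(2) for _ in range(3)]
update_committee(committee, X, y, np.random.default_rng(0))
print(committee_vote(committee, X))
```

Because each member sees a different random subset, the members tend to disagree on ambiguous samples, and that disagreement can serve as an uncertainty signal in addition to the majority label.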

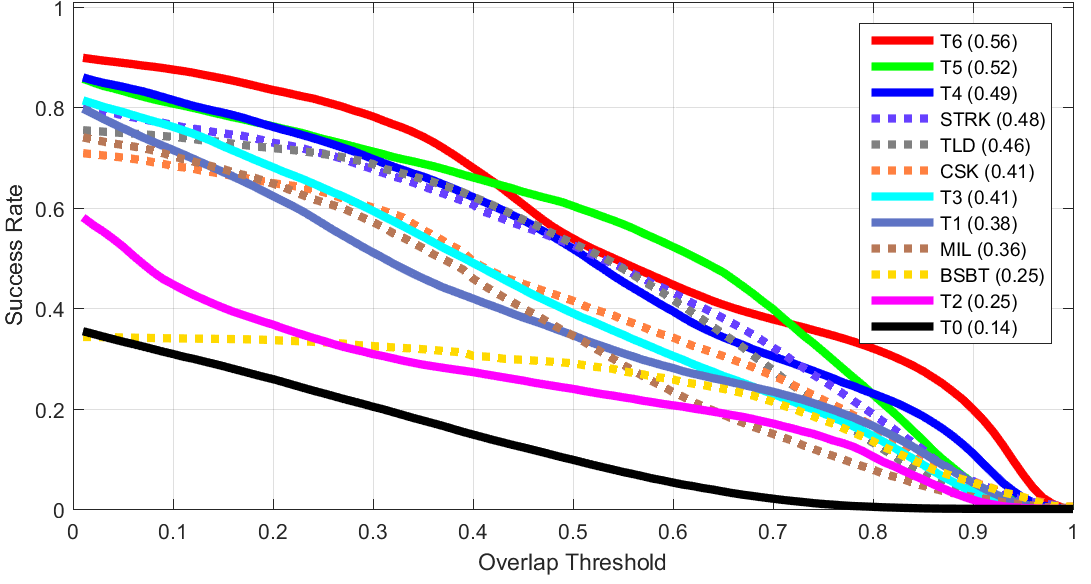

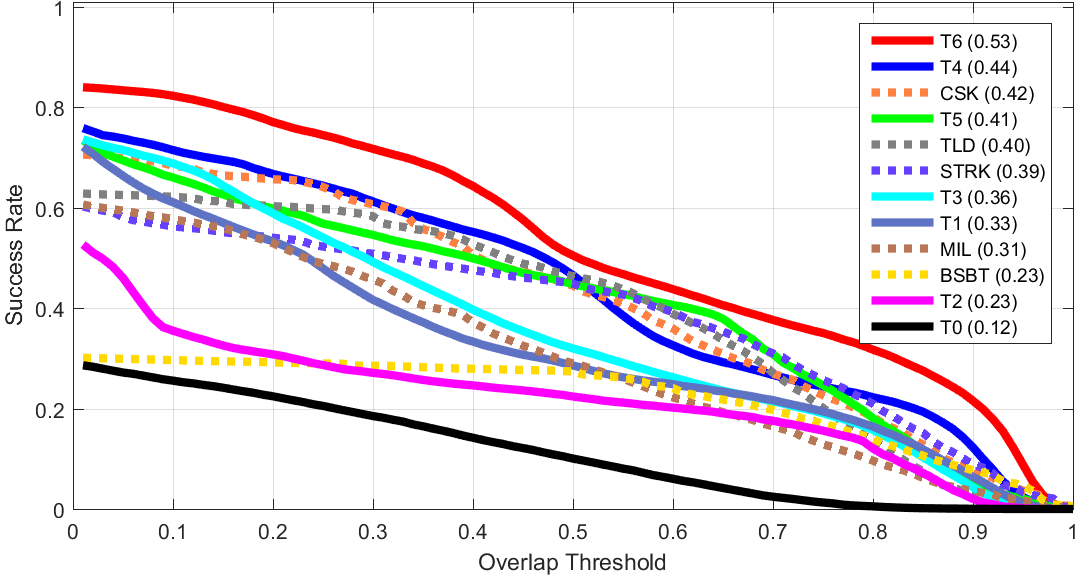

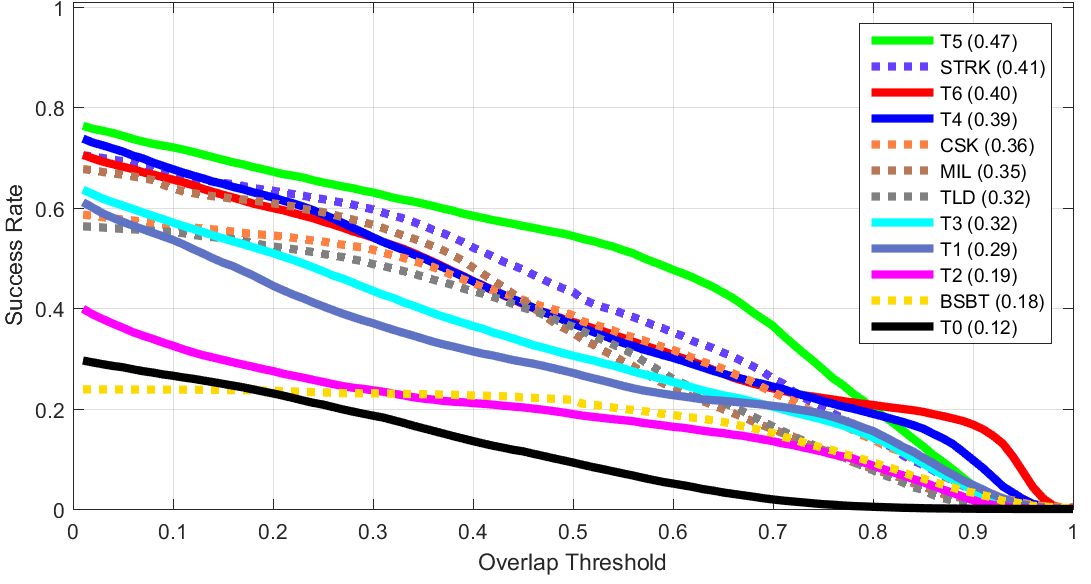

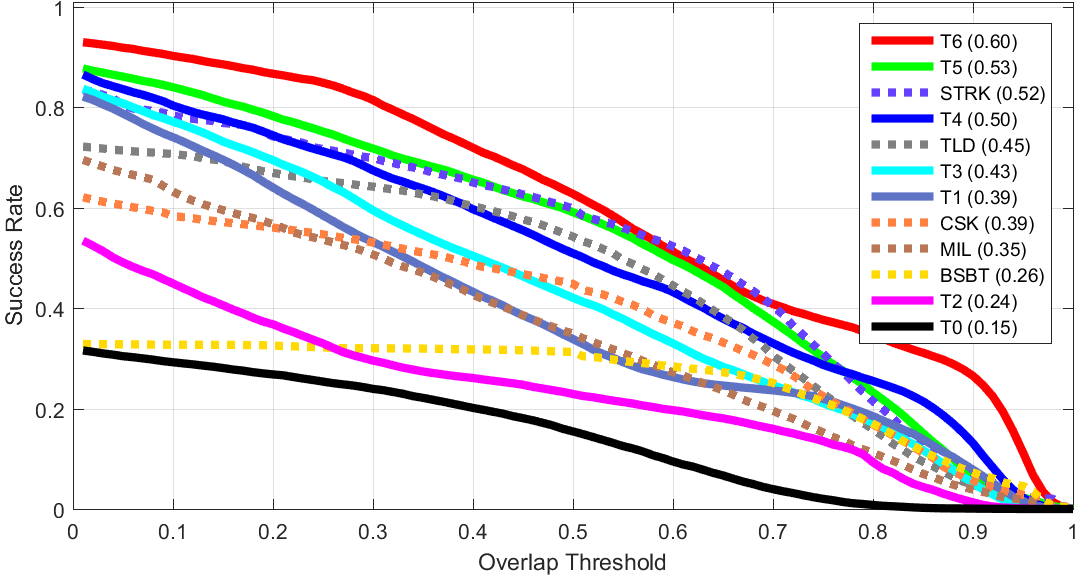

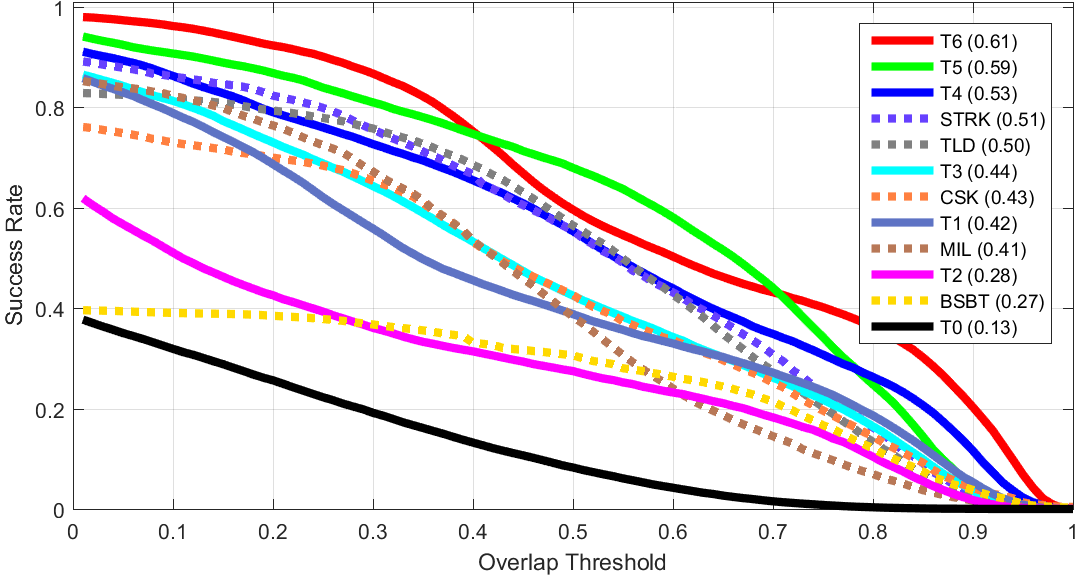

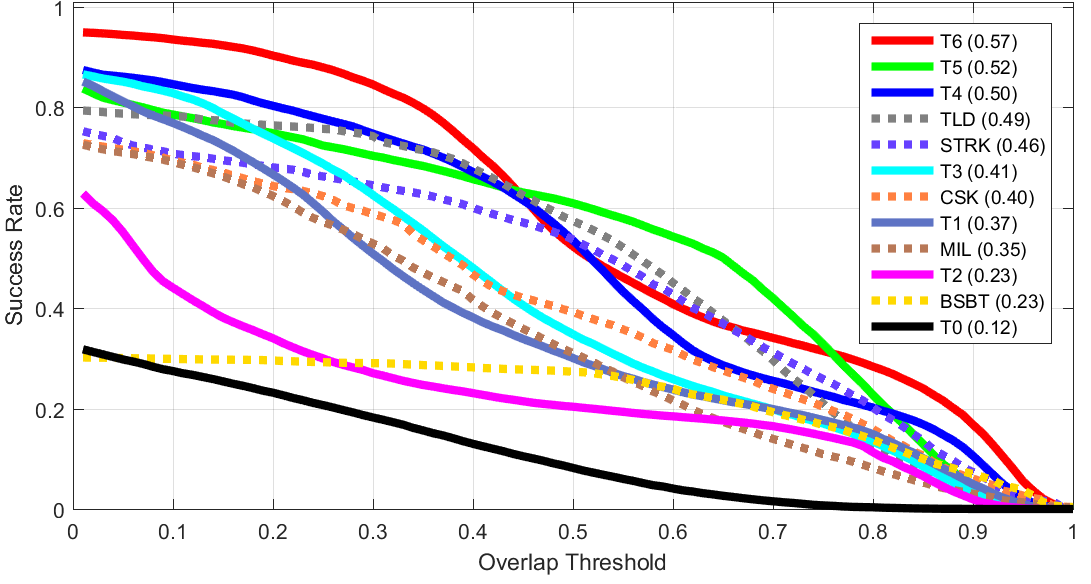

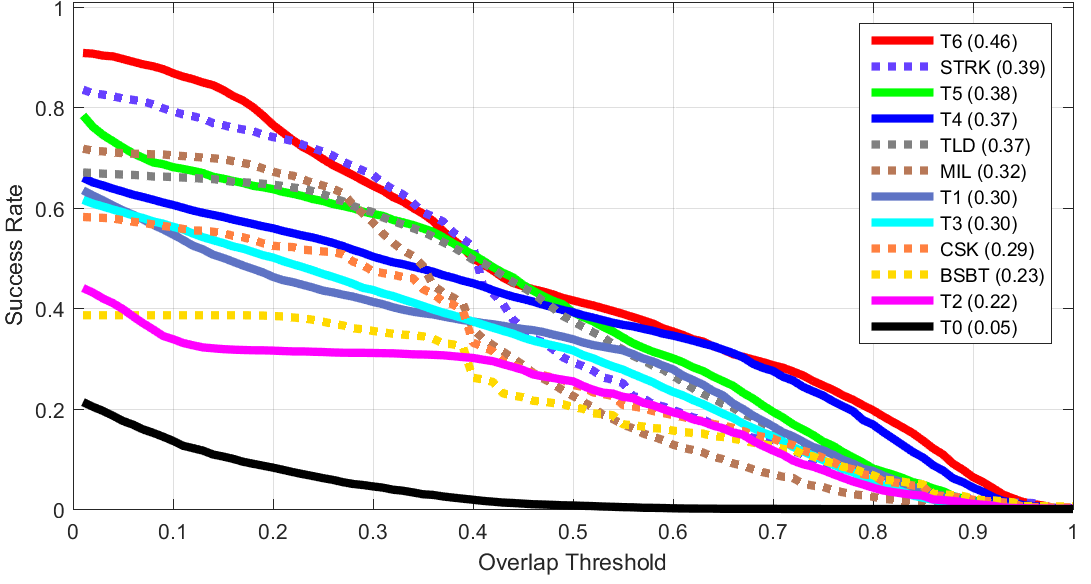

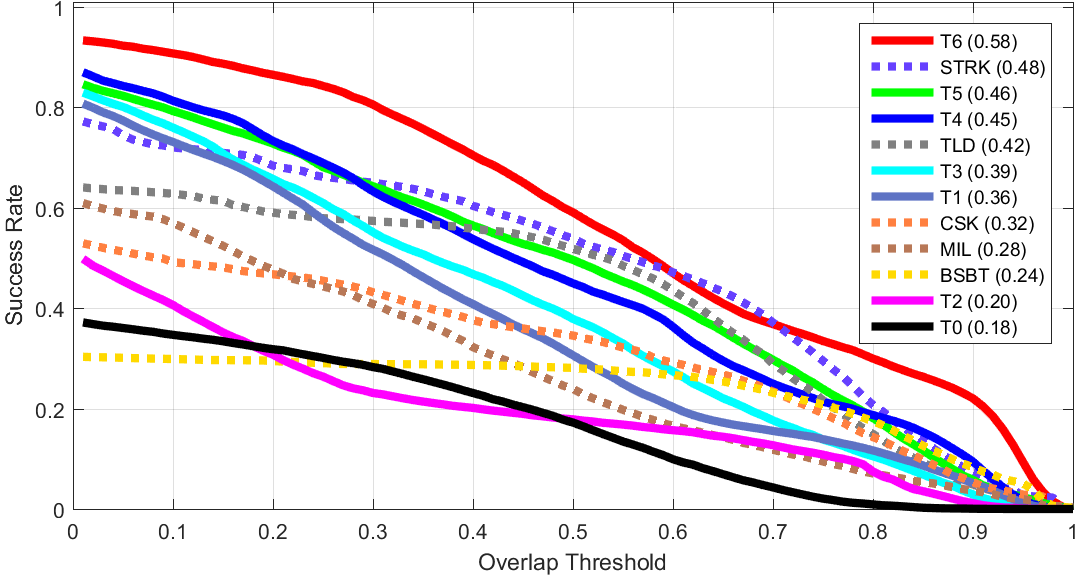

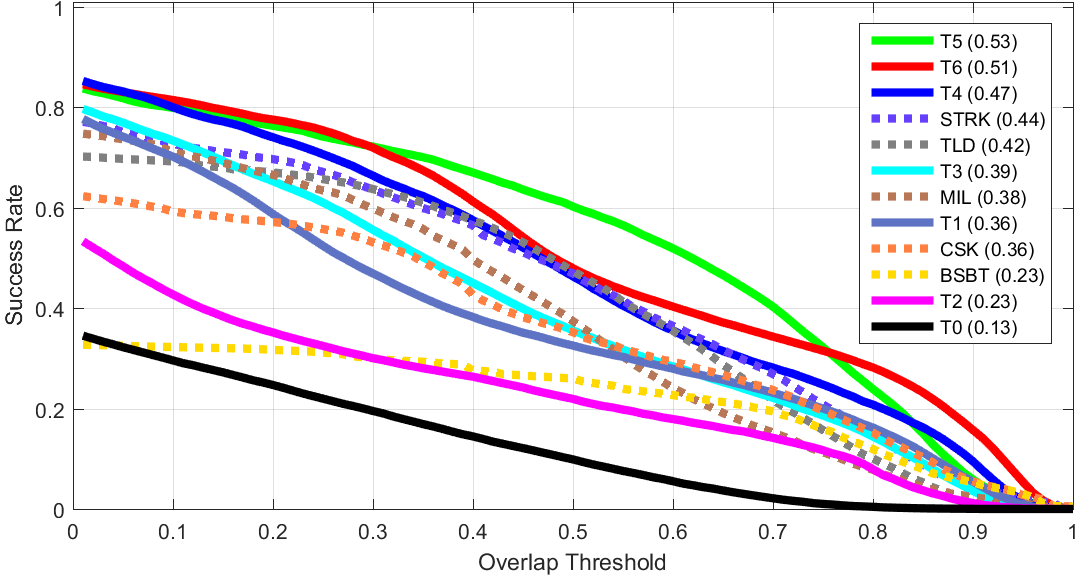

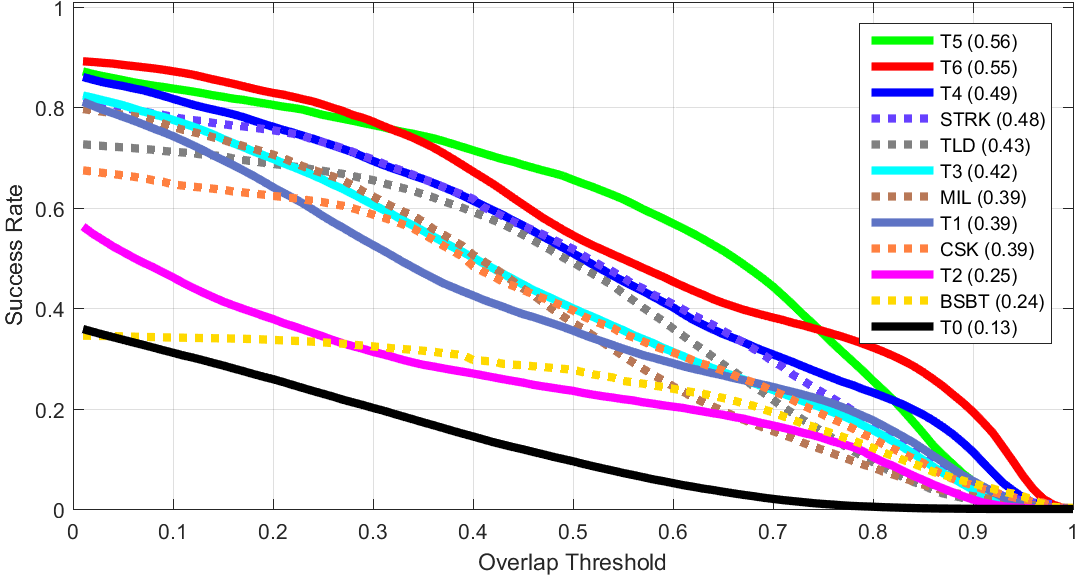

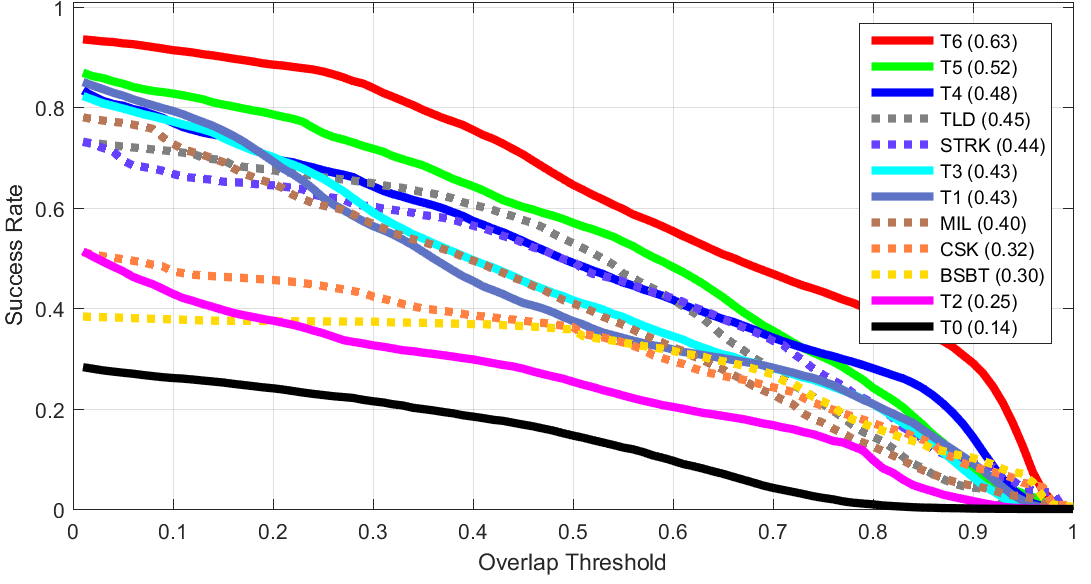

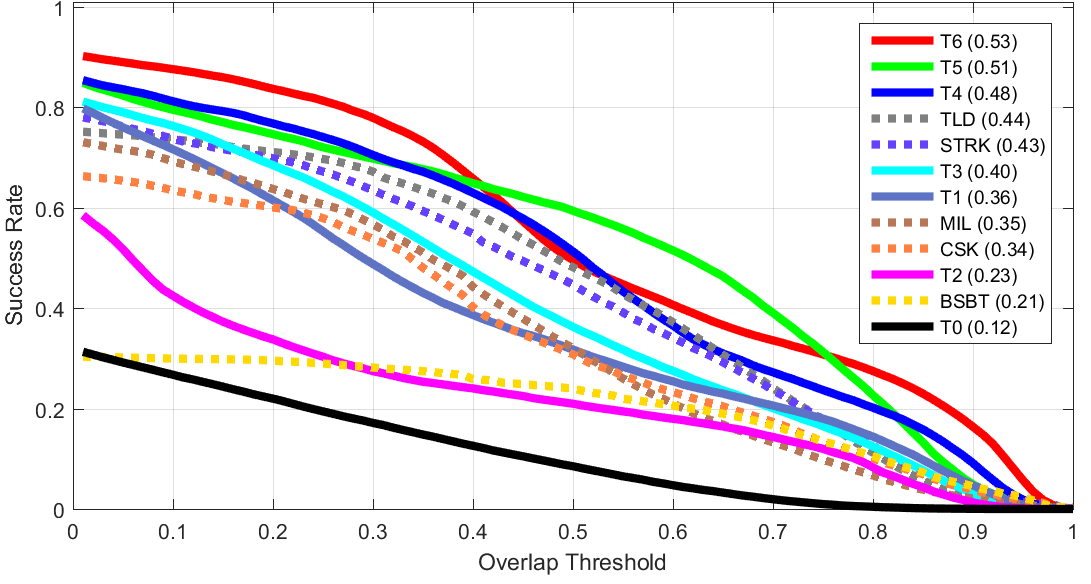

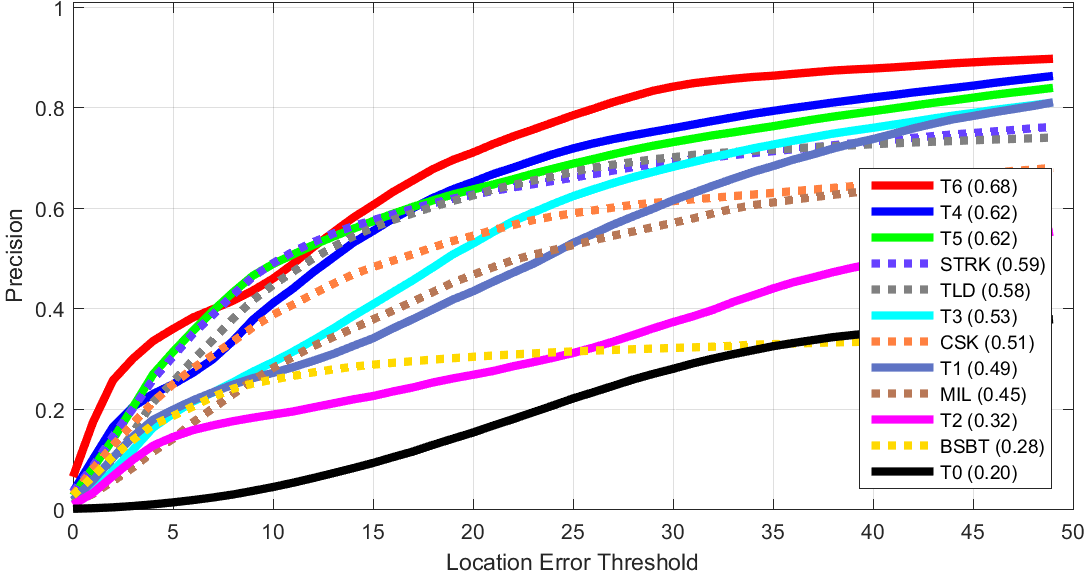

Success Plots

BibTex reference


@Book{meshgi2017act,
  Title     = {Active Collaboration of Classifiers for Visual Tracking},
  Author    = {Meshgi, Kourosh and Oba, Shigeyuki},
  Editor    = {Anbarjafari, Gholamreza and Escalera, Sergio},
  Publisher = {InTech},
  Year      = {2017},
  ISBN      = {978-953-51-5611-6}
}

Acknowledgements

This article is based on results obtained from a project commissioned by the Japan NEDO and was supported by Post-K application development for exploratory challenges from the Japan MEXT.

For more information or help please email meshgi-k [at] sys.i.kyoto-u.ac.jp.