Adaptive Correlation Filters for Visual Tracking via Reinforcement Learning

ECCV'2018, Munich, Germany, Sep 8-Sep 14, 2018

Visual object tracking remains a challenging computer vision problem with numerous real-world applications. Recently, Discriminative Correlation Filter (DCF)-based methods have achieved state-of-the-art performance on this problem. The learning rate of a DCF is typically fixed, regardless of the situation. However, this rate is important for robust tracking, because natural videos include a variety of dynamical changes, such as occlusion, out-of-plane rotation, and deformation. In this work, we propose a method for determining the learning rate dynamically by means of reinforcement learning, in which we train a function that takes an image patch and outputs a situation-dependent learning rate. We evaluated this method on the open benchmark OTB-2015 and found that it outperformed the original DCF tracker. Our results demonstrate the advantages of so-called meta-learning for DCF-based visual object tracking.

Overview

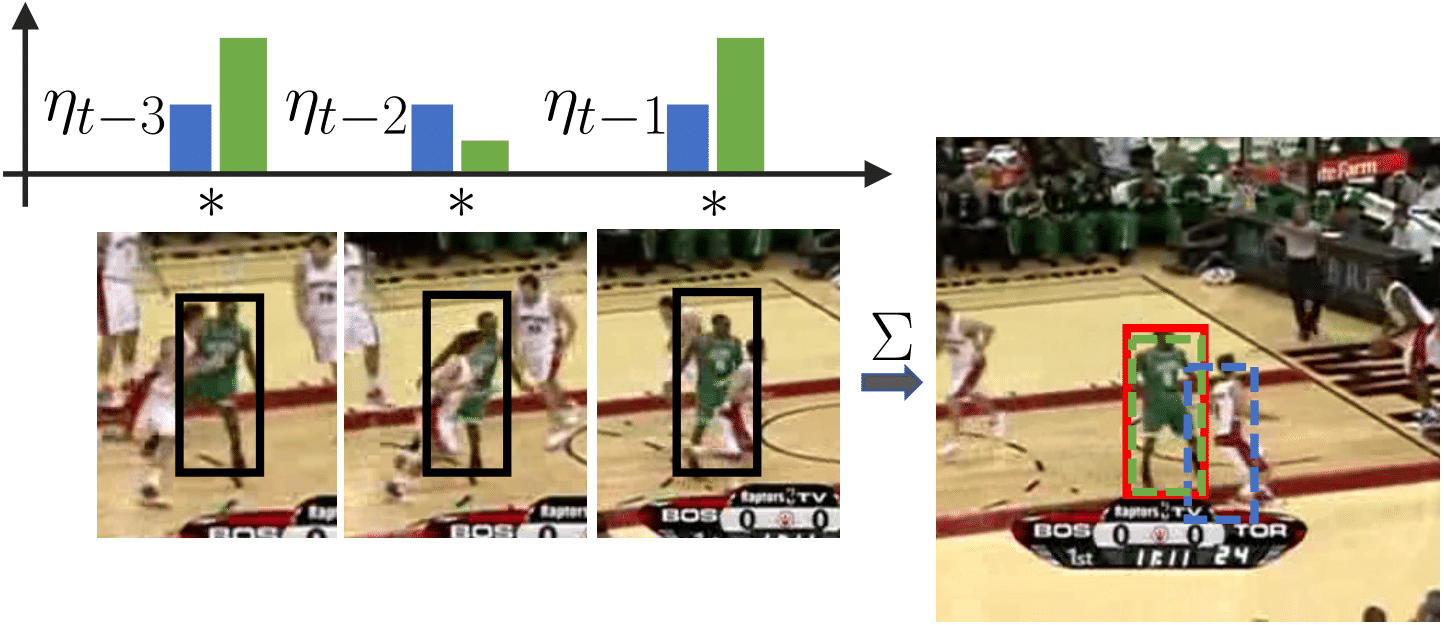

Because natural videos contain an abundance of dynamical changes in the foreground and background, a tracker can benefit from adaptively using the features it collects from past and current frames, although this topic has not been fully discussed. In this work, we establish the advantages of adaptively updating the learning-rate parameter used to train a DCF-based tracker. DCF learning is self-contained. When a typical DCF tracker takes a new input patch at time $k = t-i$ ($i = 1,2,3$), it assigns a fixed, predetermined learning rate $\eta_{t-i}$, shown here as the blue bar. When the target has been occluded, however, this strategy can cause the tracker to fail at time $k = t$ due to over-fitting. In contrast, an adaptive learning rate (shown as the green bar) avoids such tracking failures. Here, the red bounding box denotes the ground truth, the green dashed bounding box is a possible prediction by a typical DCF tracker, and the blue dashed bounding box represents the prediction of a DCF tracker with an adaptive learning rate. To obtain this adaptive learning rate, we propose using reinforcement learning as meta-learning in a DCF-based tracker.
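The fixed-rate update described above is commonly a linear interpolation between the old model and the model learned from the newest patch. The following minimal sketch assumes a single-channel, MOSSE-style filter kept as a running numerator `A` and denominator `B` in the Fourier domain; it is not the BACF update used in this work, and the names `MosseLikeFilter`, `eta`, and `lam` are hypothetical. Choosing `eta` per frame, instead of fixing it, is the quantity an adaptive scheme would control.

```python
import numpy as np

def gaussian_response(shape, sigma=2.0):
    """Desired correlation output: a 2-D Gaussian peak centred in the patch (Fourier domain)."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    g = np.exp(-((ys - h // 2) ** 2 + (xs - w // 2) ** 2) / (2 * sigma ** 2))
    return np.fft.fft2(g)

class MosseLikeFilter:
    """Hypothetical single-channel correlation filter stored as numerator A and denominator B."""

    def __init__(self, patch, lam=1e-2):
        self.lam = lam
        self.G = gaussian_response(patch.shape)
        F = np.fft.fft2(patch)
        self.A = self.G * np.conj(F)
        self.B = F * np.conj(F) + lam

    def update(self, patch, eta):
        """Blend the model learned from `patch` into the running model with learning rate eta."""
        F = np.fft.fft2(patch)
        self.A = (1 - eta) * self.A + eta * self.G * np.conj(F)
        self.B = (1 - eta) * self.B + eta * (F * np.conj(F) + self.lam)

    def respond(self, patch):
        """Spatial correlation response; its peak indicates the predicted target position."""
        H_conj = self.A / self.B
        return np.real(np.fft.ifft2(H_conj * np.fft.fft2(patch)))
```

With `eta=0.0` the call leaves the model unchanged, which is one way an adaptive scheme could skip an occluded frame; a fixed `eta` would instead blend the occluder's appearance into `A` and `B`.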

The following video shows tracking with a typical DCF-based tracker (namely BACF, shown by the blue bounding box), which has difficulty achieving good performance. We conducted a simple experiment. First, the learning rate $\eta$ was set to the value used by BACF. Then, for each frame of the video clip, we manually tuned the learning rate before the model update. We found manually tuned learning rates with which the tracker achieved much better performance (red bounding box). This observation suggests that adaptively tuning the update learning rate can improve tracking performance.

Tracking results of BACF with a fixed learning rate (blue) and with a manually adjusted learning rate (red). Black indicates the ground truth.

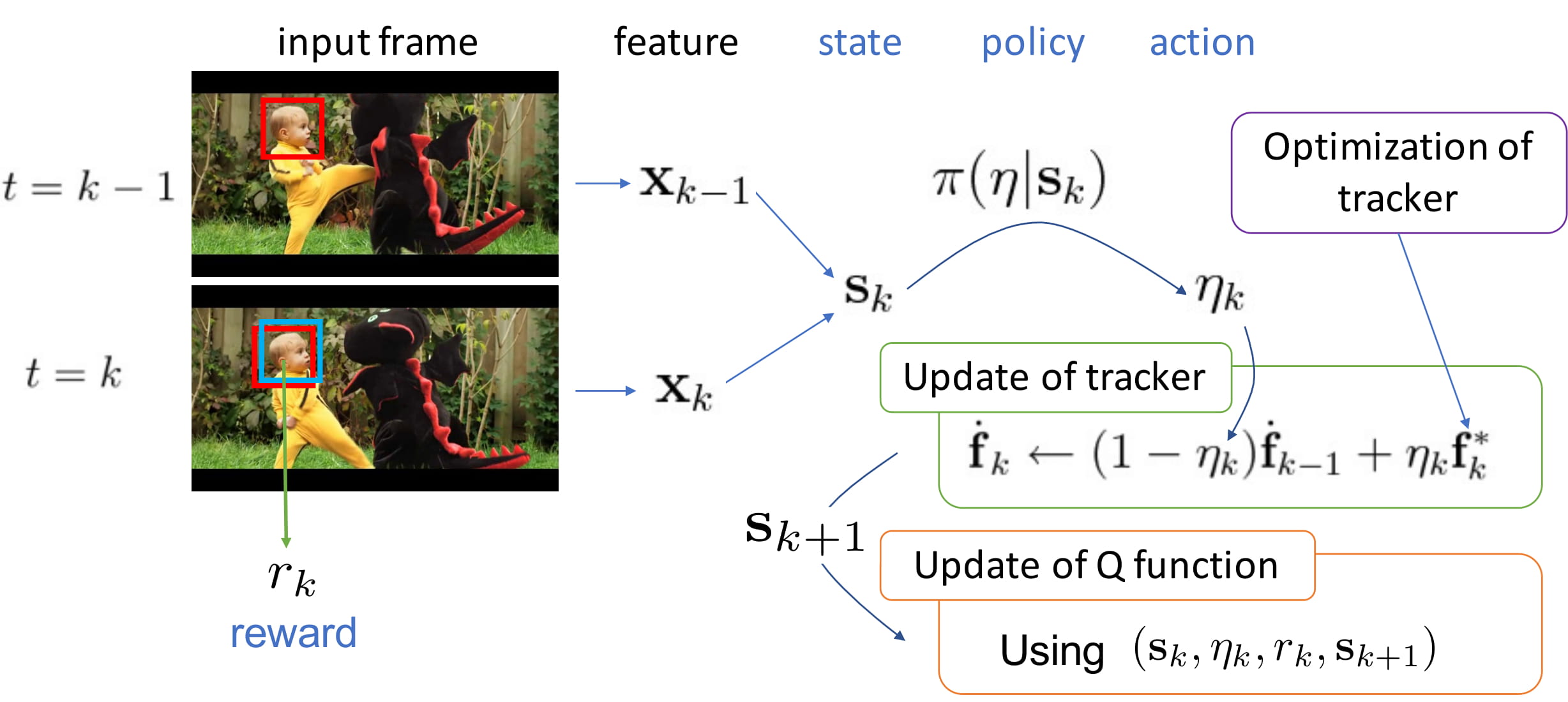

In effect, we propose meta-learning for the tracker. This meta-learning of the DCF-based tracker is based on reinforcement learning (RL), in which we train a Q-function that estimates the value of taking an action in a given state, so as to maximize the total reward accumulated over a sequence of states and actions. We adapted the RL framework to the visual object tracking (VOT) problem by associating the state with the image patch and the action with the learning rate. A reward is given when the DCF tracker performs well on a specific video. Through RL, we can then expect the tracker to perform well in similar dynamical situations in other videos. In addition, we used a deep neural network (DNN) to represent the Q-function; RL with a DNN-represented Q-function was previously proposed as the deep Q-network (DQN).
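To make the state/action/reward mapping concrete, the following sketch shows epsilon-greedy selection and one Q-learning step over a discrete set of candidate learning rates. It is not the DQCF implementation: the action set `LEARNING_RATES` is hypothetical, a linear Q-function over a feature vector `phi` stands in for the DNN (in practice `phi` would be computed from the image patch), and the `reward` passed to `td_update` is whatever tracking-quality signal one chooses.

```python
import numpy as np

# Hypothetical discrete action set: each action index selects one candidate learning rate.
LEARNING_RATES = np.array([0.0, 0.005, 0.01, 0.02, 0.05])

class LinearQ:
    """Q(s, a) = W[a] . phi(s); a linear stand-in for the deep Q-network."""

    def __init__(self, n_features, n_actions, rng):
        self.W = np.zeros((n_actions, n_features))
        self.rng = rng

    def q_values(self, phi):
        """Estimated value of every action (learning rate) in the state described by phi."""
        return self.W @ phi

    def select_action(self, phi, epsilon):
        """Epsilon-greedy: explore with probability epsilon, otherwise pick the best action."""
        if self.rng.random() < epsilon:
            return int(self.rng.integers(len(self.W)))
        return int(np.argmax(self.q_values(phi)))

    def td_update(self, phi, a, reward, phi_next, done, gamma=0.9, alpha=0.1):
        """One Q-learning step toward the target r + gamma * max_a' Q(s', a')."""
        target = reward if done else reward + gamma * np.max(self.q_values(phi_next))
        td_error = target - self.q_values(phi)[a]
        self.W[a] += alpha * td_error * phi
        return td_error
```

At tracking time, `LEARNING_RATES[q.select_action(phi, epsilon=0.0)]` would give the learning rate passed to the DCF model update for the current frame.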

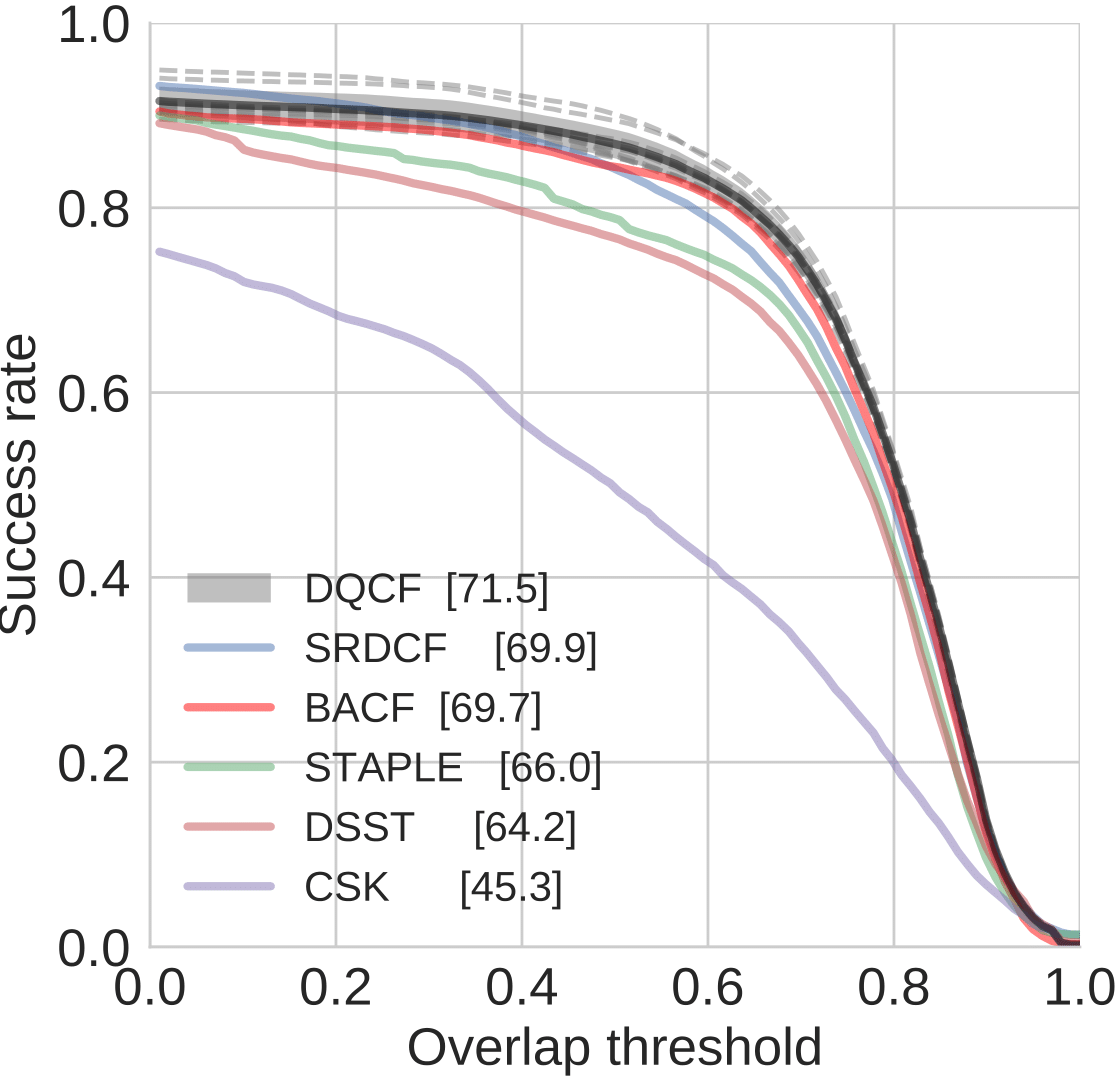

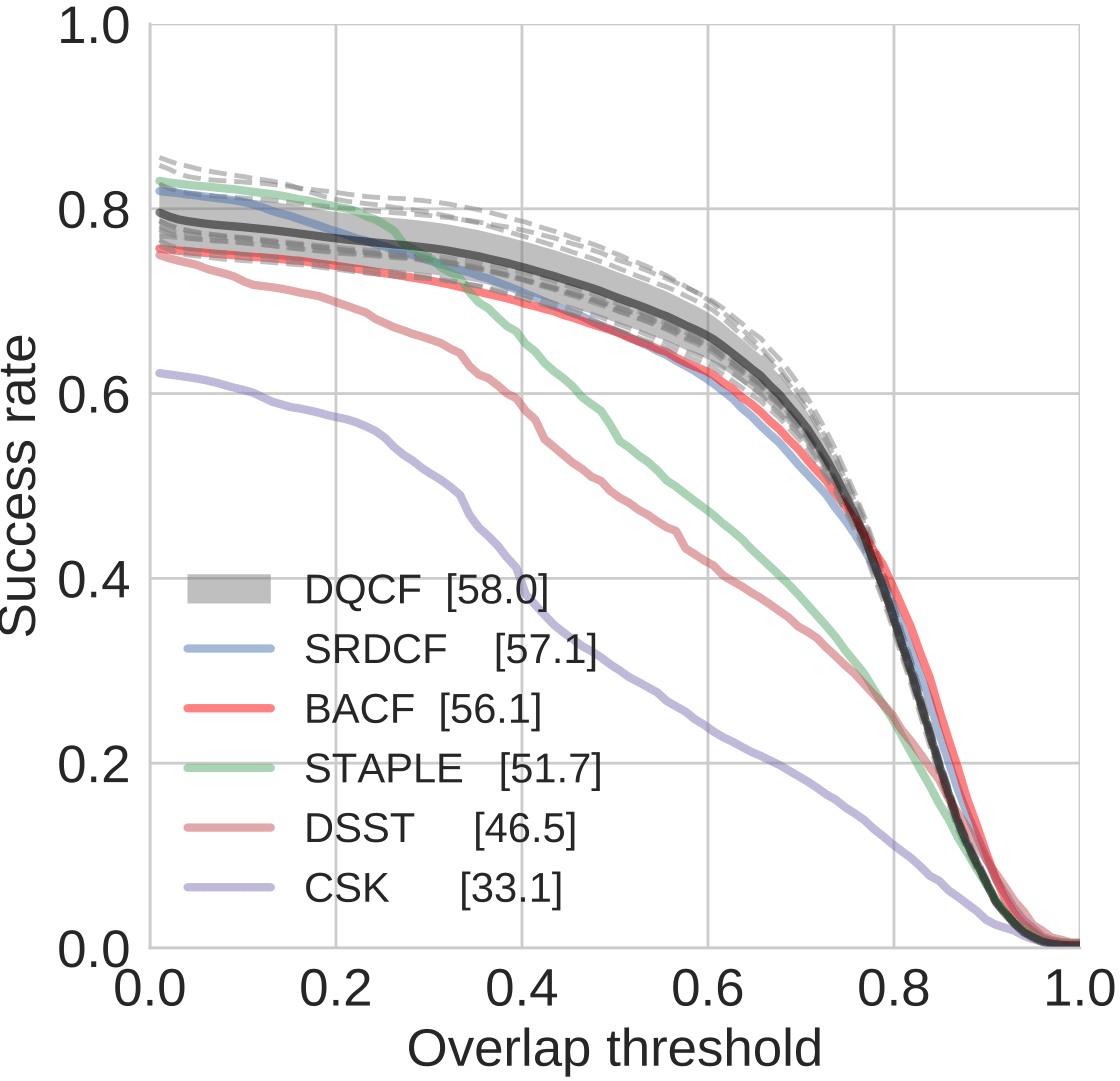

General Results

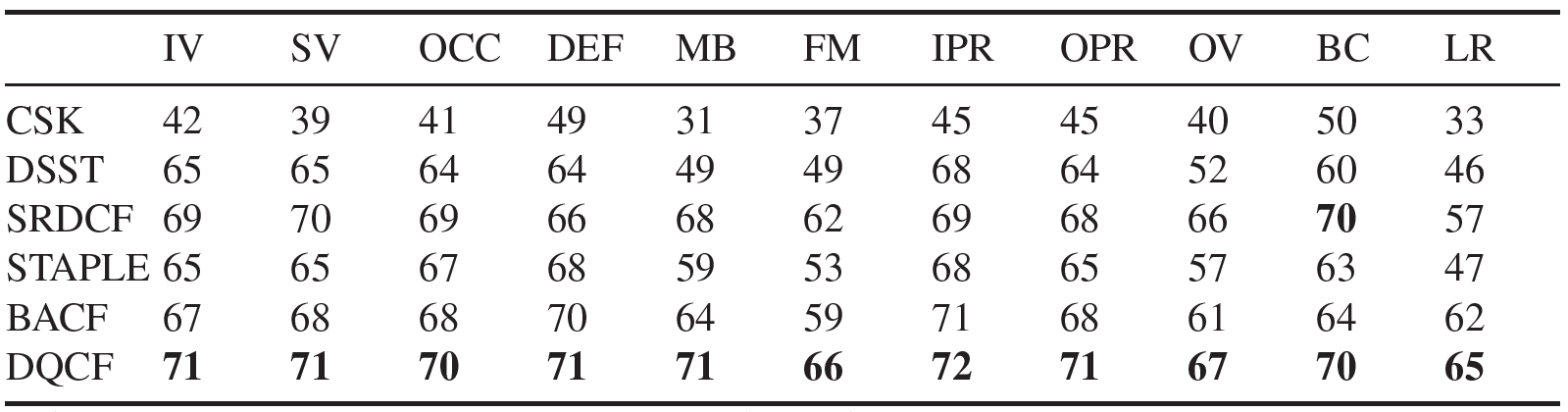

Comparison of AUCs (%) for each attribute, namely illumination variation (IV), scale variation (SV), occlusion (OCC), deformation (DEF), motion blur (MB), fast motion (FM), in-plane rotation (IPR), out-of-plane rotation (OPR), out-of-view (OV), background clutter (BC), and low resolution (LR), on OTB50.
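The AUC values in this comparison are areas under success plots. A minimal sketch of how such a score can be computed for a single sequence follows, assuming boxes in `(x, y, w, h)` format and 21 overlap thresholds evenly spaced from 0 to 1; it ignores the one-pixel conventions of the official OTB toolkit, and the function names are hypothetical. A per-attribute score could then average this quantity over the sequences annotated with that attribute.

```python
import numpy as np

def iou(a, b):
    """Intersection-over-union of boxes given as (x, y, w, h) rows of two (N, 4) arrays."""
    x1 = np.maximum(a[:, 0], b[:, 0])
    y1 = np.maximum(a[:, 1], b[:, 1])
    x2 = np.minimum(a[:, 0] + a[:, 2], b[:, 0] + b[:, 2])
    y2 = np.minimum(a[:, 1] + a[:, 3], b[:, 1] + b[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = a[:, 2] * a[:, 3] + b[:, 2] * b[:, 3] - inter
    return inter / union

def success_auc(pred, gt, thresholds=np.linspace(0.0, 1.0, 21)):
    """Mean success rate (fraction of frames with IoU above each threshold), in percent."""
    overlaps = iou(pred, gt)
    success = [(overlaps > t).mean() for t in thresholds]
    return 100.0 * float(np.mean(success))
```

Because the comparison uses a strict `>`, even a perfect tracker scores 20/21 of 100% with these thresholds.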

Qualitative Results

Sample videos from the dataset with bounding boxes for the ground truth (black), the baseline method (BACF, blue), the best of 10 runs of our tracker, DQCF (green), and the worst of those 10 runs (yellow).

BibTeX reference


@article{kubo2018dqcf,
title={Adaptive Correlation Filters for Visual Tracking via Reinforcement Learning},
author={Kubo, Akihiro and Meshgi, Kourosh and Oba, Shigeyuki and Ishii, Shin},
journal={Proceeding of ECCV'2018 (submitted)},
year={2018}
}

Acknowledgements

This article is based on results obtained from a project commissioned by NEDO, Japan, and was supported by the Post-K application development for exploratory challenges of MEXT, Japan.

For more information or help, please email kubo-a [at] sys.i.kyoto-u.ac.jp.